Welcome to the 1st ISVSPP archive. Please click the links below to find out more about this event, its organizers, participants and more!

Organizers || Invited Speakers || Program || Voice Quality: the Laryngeal Articulator, Infant Speech Acquisition, Speech Origins || Photos || Registration

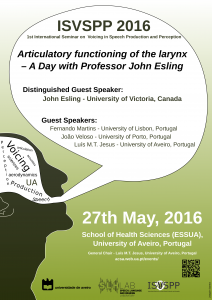

Feel free to download the seminar poster here.

About ISVSPP

The University of Aveiro, Portugal, is pleased to announce that it will be hosting the 1st International Seminar on Voicing in Speech Production and Perception (ISVSPP 2016) on the 27th of May 2016.

This year’s program theme is “Articulatory functioning of the larynx – A Day with Professor John Esling” and includes the following invited speakers:

- John Esling, University of Victoria, Canada

- Fernando Martins, University of Lisbon, Portugal

- João Veloso, University of Porto, Portugal

- Luís Jesus, University of Aveiro, Portugal

Please check our speakers page to see more information about them.

The ISVSPP seminars aim to bring together senior and junior scientists working in the multidisciplinary field of Speech Production and Perception, ensuring direct and informal interaction between all participants.

The conference program will include keynotes and ample opportunities to discuss participants’ own research. Registration will include lunch and a coffee break.

The venue is the School of Health Sciences on the University of Aveiro’s Campus de Santiago. The campus overlooks the Aveiro lagoon, lies only a short distance from the city centre, and is renowned internationally for its many buildings designed by famous Portuguese architects.

Join us for a day with Professor John Esling: THINK speech production and perception for a day, let yourself be engaged and involved by Professor Esling’s unique lecturing style, make the most of the various opportunities for informal discussion with the invited speakers, and perhaps take a short weekend break in this unique coastal region we call Aveiro.

International Seminar on Voicing in Speech Production and Perception

University of Aveiro, Portugal

Speech science has long tried to integrate a multitude of factors from the physical sciences to formulate empirically based theories about the human communication system, as well as data-driven (and/or knowledge-based) models of speech production and perception. This seminar attempts to bridge the knowledge gap between the information that we can derive from various speech production data (acoustic, aerodynamic and articulatory measures) and speech perception. The focus will be on the production and perception of voicing in speech, particularly in mixed-source sounds produced by the interaction of two simultaneous sources of very different nature (i.e., voicing and noise). The presence of both phonation and frication in these mixed-source sounds allows for mutual interaction effects, which vary across places of articulation. The acoustic and articulatory consequences of these interactions are seldom explored, and few automatic techniques for finding parametric and statistical descriptions of these phenomena have been proposed so far.

The rationale behind this seminar could be summarised as follows:

- To improve the understanding of the aerodynamic, articulatory and acoustic interactions that govern the production principles involved in the voicing of speech sounds.

- To deepen our knowledge of the different contributions (across languages) of acoustic parameters or auditory features to voicing distinction (perception of voicing).

- To foster the growing cross-disciplinary interest in the subject, attested by a large number of publications in recent years.

The expected outcomes and people involved are:

- Fostering new collaborations between researchers from different parts of the world (e.g., the Americas, Asia and Europe); contributing to the development of new voicing measures that deepen and broaden our phonetic description of different languages and that will be useful for the automatic classification of speech sounds; and applying the extracted features to language-specific phonetic description and speech technology (synthesis and recognition).

- Encouraging links between senior and junior researchers from scientific disciplines such as speech and hearing sciences; phonetic sciences; linguistics; electronics and computer sciences; health sciences; mathematics; and physics.

Motivation and Rationale

Current understanding of the aerodynamic, articulatory and acoustic interactions that govern the production principles involved in the voicing of speech sounds, particularly voicing during consonant production, is still limited. Despite the fundamental interaction of voicing mechanisms with supralaryngeal configurations and airflow, differences in aerodynamic behaviour have rarely been used to investigate voicing in continuous speech. In the longer term, measurements of this kind might lead to a more in-depth understanding of the conditions required for the maintenance or cessation of glottal vibration. Such measurements have only recently led to a renewed interest in the classical topic of voicing. The use of stimuli in a rich variety of contexts (resulting in multiple within-word and across-word interaction effects) reveals details about production mechanisms that result from real physiological conditions and from the requirements placed upon the speech system. Defining non-modal voicing, both qualitatively and quantitatively, in terms of factors more closely related to phone production (laryngeal behaviour) than to the acoustic signal could facilitate the exploration of the relationship between laryngeal activity and biomedical signals. New findings on the acoustic correlates of prosody related to voice quality, and on the role of the subglottal system in vocal fold vibration, have unveiled novel physiological and acoustical characteristics of the voice source.

The literature is sparse concerning the different contributions (across languages) of acoustic parameters or auditory features to voicing distinction. It is generally agreed that voice onset time (VOT) is the primary perceptual cue for voicing distinction in stops. However, analyses of real speech data also show that a significant number of productions have no audible release. Because VOT is measured from the release burst, a missing burst forces the perceptual system to rely on other voicing cues to perform the voicing distinction. For vowels, it has been shown not only that the perceptual system can weight different cues against one another (i.e., apply cue trading) to achieve a robust perceptual outcome, but also that this weighting differs across dialects and languages. For the perception of obstruent voicing, this cue weighting is assumed to be highly language-dependent: while some languages rely mainly on strong cues such as VOT, others rely instead on voicing maintenance, or on closure and vowel duration cues. Thus, when comparing languages, a number of different acoustic parameters have to be taken into account when examining cue mediation for voicing distinction and the perception of voice quality.